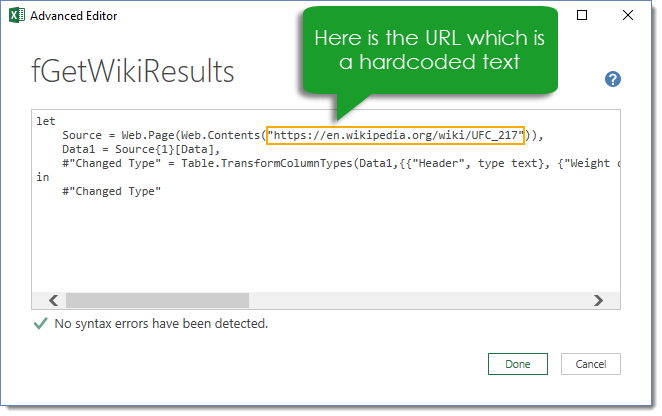

To extract the tagged data, you apply html_text() to the nodes you want. For the cases where you need to extract the attributes instead, you apply html_attrs(). This returns a list of the attributes, which you can subset to get to the attribute you want to extract.

After a right-click on Amazon's landing page, you can choose to inspect the source code. You can search for the number '155' to quickly find the relevant section. You can see that all of the page-button information is tagged with the 'pagination-page' class. With that in mind, you can write a function, get_last_page(), that takes the raw HTML of the landing page and extracts the second-to-last item of the pagination-page class, since the second-to-last button is the one that holds the number of the last page. The step specific to this function is the application of html_nodes(), which extracts all nodes of the pagination class. The last part of the function simply takes the correct item of the list, the second to last, and converts it to a numeric value. To test the function, you can load the starting page into a variable such as first_page using read_html() and apply the function you just wrote to it.

The datetime information is a little trickier, as it is stored as an attribute. In general, you look for the broadest description and then try to cut out all redundant information. Because time information does not only appear in the reviews, you also have to extract the relevant status information, which this time is also a tag attribute, and filter by the correct entry. Finally, you parse the remaining strings into datetime objects with lubridate.
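The following sketch puts the pagination and datetime steps together on a small inline HTML snippet, so it runs offline. It is an illustration under stated assumptions, not the tutorial's original code. The markup, the attribute names datetime and data-status, and the status value 'review' are hypothetical. Status and timestamp are looked up by attribute name rather than by position. On the live site you would pass the result of read_html() on the landing page URL instead.

```r
library(rvest)      # read_html(), html_nodes(), html_text(), html_attrs(), %>%
library(lubridate)  # ymd_hms()

# Hypothetical markup: the pagination buttons share the class
# 'pagination-page', and every <time> tag stores its timestamp and a status
# flag as attributes. The attribute names 'datetime' and 'data-status' and
# the status value 'review' are assumptions for illustration only.
landing_html <- read_html('
<html><body>
  <a class="pagination-page">1</a>
  <a class="pagination-page">2</a>
  <a class="pagination-page">155</a>
  <a class="pagination-page">Next page</a>
  <time datetime="2018-01-05T10:15:00Z" data-status="review"></time>
  <time datetime="2018-01-03T08:00:00Z" data-status="reply"></time>
</body></html>')

get_last_page <- function(html) {
  pages_data <- html %>%
    html_nodes(".pagination-page") %>%  # '.' selects by class
    html_text()                         # button labels as character strings
  # The second-to-last button carries the number of the last page
  as.numeric(pages_data[length(pages_data) - 1])
}

get_review_dates <- function(html) {
  # html_attrs() returns one named character vector of attributes per node
  time_attrs <- html %>%
    html_nodes("time") %>%
    html_attrs()
  status <- vapply(time_attrs, function(a) a[["data-status"]], character(1))
  stamps <- vapply(time_attrs, function(a) a[["datetime"]], character(1))
  # Keep only the timestamps flagged as reviews, then parse them with lubridate
  ymd_hms(stamps[status == "review"])
}

last_page <- get_last_page(landing_html)        # 155
review_dates <- get_review_dates(landing_html)  # 2018-01-05 10:15:00 UTC
```

Looking attributes up by name rather than by position keeps the function working if the attribute order in the markup changes.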

When you call read_html(), you supply a target URL, and the function calls the web server, collects the data, and parses it. To extract the relevant nodes from the resulting XML object, you use html_nodes(), whose argument is the class descriptor prepended by a '.' to signify that it is a class. The output will be a list of all the nodes found in that way.
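To see html_nodes() and html_text() in action without contacting a server, the sketch below parses a small inline HTML snippet. The markup and its class names ('review-title', 'review-body') are hypothetical stand-ins, not Trustpilot's actual structure.

```r
library(rvest)

# Hypothetical markup standing in for a review page; the class names are
# assumptions for illustration, not taken from the real site.
page <- read_html('
<html><body>
  <div class="review">
    <h2 class="review-title">Quick delivery</h2>
    <p class="review-body">Arrived a day early.</p>
  </div>
  <div class="review">
    <h2 class="review-title">Broken item</h2>
    <p class="review-body">The box was crushed.</p>
  </div>
</body></html>')

# The leading '.' marks 'review-body' as a class selector.
body_nodes <- html_nodes(page, ".review-body")
length(body_nodes)  # 2, one node per review

# html_text() pulls the text content out of every matched node.
review_texts <- html_text(body_nodes)
```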

The choice of Amazon is purely for demonstration purposes and is in no way related to the case study that you'll cover in the second half of the tutorial. The landing page URL will serve as the identifier for a company, so you will store it as a variable. To get to the data, you will need some functions of the rvest package. To convert a website into an XML object, you use the read_html() function.
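Here is a minimal sketch of storing the landing page URL and parsing a page with read_html(). The URL below is a hypothetical placeholder, not a real company page. Because the live call needs network access, the sketch also parses a literal HTML string, which read_html() accepts as well, to show the kind of object you get back.

```r
library(rvest)

# Hypothetical placeholder: substitute the Trustpilot landing page URL of
# the company you want to study. It serves as the company identifier.
url <- "https://www.trustpilot.com/review/example.com"

# With network access, this downloads and parses the live page:
# landing_page <- read_html(url)

# Offline stand-in: read_html() also accepts a literal HTML string,
# which is handy for trying out selectors without hitting the server.
landing_page <- read_html("<html><body><h1>Example Inc.</h1></body></html>")

class(landing_page)  # "xml_document" "xml_node"
```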

You will find that Trustpilot might not be as trustworthy as advertised. More specifically, this tutorial will cover the following:

- You'll first learn how you can scrape Trustpilot to gather reviews.
- Then, you'll see some basic techniques to extract information from one page: you'll extract the review text, rating, name of the author and time of submission of all the reviews on a subpage.
- With these tools at hand, you're ready to step up your game and compare the reviews of two companies (of your own choice): you'll see how you can make use of tidyverse packages such as ggplot2 and dplyr, in combination with xts, to inspect the data further and to formulate a hypothesis, which you can further investigate with the infer package, a package for statistical inference that follows the philosophy of the tidyverse.

On Trustpilot, a review consists of a short description of the service, a 5-star rating, a user name and the time the post was made. Your goal is to write a function in R that will extract this information for any company you choose.

Create a Scraping Function

First, you will need to load all the libraries for this task, including packages for general-purpose data wrangling. As an example, you can choose the e-commerce company Amazon.
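The library-loading step might look like the following minimal sketch. The exact package list is an assumption. It covers the tidyverse for general-purpose data wrangling, plus rvest for HTML parsing and lubridate for datetimes. xts and infer, mentioned for the later comparison, would be loaded the same way once installed.

```r
# Assumed package list for the scraping steps; extend as needed.
library(tidyverse)  # general-purpose data wrangling (attaches dplyr, ggplot2, ...)
library(rvest)      # parsing HTML/XML pages
library(lubridate)  # parsing datetime strings
```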

URL EXTRACTOR FROM MULTIPLE WEBSITES: HOW TO

In this short tutorial, you'll learn how to scrape useful information off Trustpilot and generate some basic insights from it with the help of R.

Trustpilot has become a popular website for customers to review businesses and services.
